Introduction

The number of unmanned systems in military inventories has grown rapidly and is still increasing across all domains. At the same time, the level of automation built into these systems has not only increased significantly but has also reached a level of sophistication at which they appear capable of performing many tasks ‘autonomously’, without direct human supervision. Highly automated air defence systems, for instance, can engage incoming targets automatically within seconds of detection, provided this mode of operation has been activated. Essentially, every component needed to build a fully automated weapon system has already been developed; the respective technologies merely have to be brought together.

For example, a future unmanned combat aircraft may combine current autopilot, navigation and sensor technology with software modules for air combat and target identification and may carry guided munitions for a kinetic engagement.

The autopilot would enable the aircraft not only to navigate to its pre-planned mission area but also to calculate its route on its own, taking all available data into account (e.g. meteorological information or intelligence about adversary threats). These data could be updated in real time during flight or gathered by on-board sensors, enabling the autopilot to adapt immediately to new conditions. In combat, the aircraft would defend itself or engage adversary targets on its own. Its air combat software module could predict possible adversary actions almost instantaneously and initiate appropriate manoeuvres, potentially giving it superiority over manned aircraft and enabling it to survive in even highly hostile environments. The sensor suite would provide the autopilot and the combat software module with comprehensive situational awareness, enabling the weapon system to identify enemy vehicles and their trajectories and to compute combat manoeuvres accordingly. Finally, a mission-tailored set of lethal payloads would enable the unmanned aircraft to conduct combat operations and engage targets autonomously.

All of the technology required to build such a fully automated weapon system already exists and is readily available on the market. The question is therefore no longer whether such systems can or should be built. The real question is: once these systems enter service, what missions will be assigned to them, and what implications will arise from that development?

The Problem with Autonomy in Weapon Systems

In the civil arena, highly automated robotic systems are already quite common, for example in the manufacturing sector. However, what is commonly accepted in the civilian community may pose a significant challenge when applied to military weapon systems. Because a fully automated or ‘autonomous’ manufacturing robot does not make decisions about the life or death of human beings, it will most likely not raise the same legal questions, if any, as a military weapon system.

Any application of military force in armed conflict is governed by International Humanitarian Law (IHL), which itself derives from, and reflects, the ethically acceptable means and customs of war. IHL has, however, been altered and amended over time to take account of developments in both human ethics and weaponry. For example, it has been modified to prohibit the use of certain types of weapons and methods of warfare.

The proliferation of unmanned systems, and especially the increasing automation in this domain, has already generated considerable debate about their use. The deployment of autonomous systems may entail a paradigm shift and a major qualitative change in the conduct of hostilities. It may also raise a range of fundamental legal and ethical issues that must be considered before such systems are developed or deployed.

Autonomous Weapon Systems in International Humanitarian Law

As yet, International Humanitarian Law contains no principles dedicated specifically to autonomous weapons. Because of this, some argue that autonomous weapons should be considered illegal and banned from military applications. However, it is a general principle of law that prohibitions have to be clearly stated; otherwise they do not apply. Consequently, the argument for banning these weapons on this basis is unfounded. Nevertheless, IHL states that where a specific issue is not covered by a dedicated provision, the principles of international law derived from established custom, from the principles of humanity and from the dictates of public conscience continue to apply (the so-called Martens Clause).

Consequently, there is no loophole in international law regarding the use of autonomous weapons. New technologies have to be judged against established principles before they can be labelled illegal in principle. An autonomous weapon system that meets the requirements of the principles of IHL may therefore be perfectly legal.

The Principles of International Humanitarian Law

During armed conflict, the IHL principles of distinction, proportionality and precaution apply. States are also obliged to review their weapons to confirm that they are in line with these principles. In general, this obligation does not impose a prohibition on any specific weapon. Rather, it accepts any weapon, means or method of warfare unless its use violates international law, and it places the responsibility on states to determine whether such use is prohibited. Therefore, autonomous systems cannot be classified as unlawful as such. Like any other weapon, means or method of warfare, they have to be reviewed with respect to the rules and principles codified in international law.

Prohibited Weapons. First and foremost, any weapon has to meet the requirements of Additional Protocol I to the Geneva Conventions, which states: ‘It is prohibited to employ weapons, projectiles and material and methods of warfare of a nature to cause superfluous injury or unnecessary suffering … [and] … are intended, or may be expected, to cause widespread, long-term and severe damage to the natural environment.’ Examples of internationally agreed weapons prohibitions include fragmentation projectiles whose fragments cannot be detected by X-rays, and the use of incendiary weapons in inhabited areas. Autonomous weapons that respect these prohibitions will be fully in line with that provision.

The Principle of Distinction. Protecting civilians from the effects of war is one of the primary principles of IHL and has been established state practice for centuries. In 1977, this principle was formally codified as follows: ‘[…] the Parties to the conflict shall at all times distinguish between the civilian population and combatants and between civilian objects and military objectives and accordingly shall direct their operations only against military objectives.’ However, applying this principle has become increasingly complex as methods of warfare have evolved. Today’s conflicts are no longer fought between two armies confronting each other on a dedicated battlefield. Participants in a contemporary armed conflict might not wear uniforms or any distinctive emblem at all, making them almost indistinguishable from the civilian population. The distinction between civilians and combatants can therefore no longer be made by visual means alone; a person’s behaviour and actions on the battlefield have become a highly important distinguishing factor as well. An autonomous weapon must consequently be capable of recognizing and analysing a person’s behaviour and determining whether he or she is taking part in hostilities. Yet whether a person is directly participating in hostilities is not always clear. An autonomous weapon will have to undergo extensive testing and prove that it can reliably distinguish combatants from civilians. Even humans, however, are not infallible, and it remains to be assessed how much probability of error, if any, would be acceptable.

The Principle of Proportionality. The use of military force must be proportionate: the incidental harm expected to civilians and civilian objects must not be excessive in relation to the concrete and direct military advantage anticipated. How this principle is applied has evolved alongside the technological capabilities of the time. For example, carpet bombing of cities inhabited by civilians was a common military practice in World War II but would be considered completely disproportionate today. Modern guided munitions are capable of hitting targets with so-called ‘surgical’ precision, and advanced software used in preparing an attack can calculate a weapon’s blast and fragmentation radius and the anticipated collateral damage. With regard to the latter in particular, it can be argued that autonomous weapons could apply military force more proportionately than humans, because they can calculate highly complex weapon effects almost instantly and thereby reduce the probability, type and severity of collateral damage. However, adhering to the principle of proportionality depends entirely on reliably identifying and distinguishing every person and object in the respective target area. Ultimately, this leads back to the application of the principle of distinction.

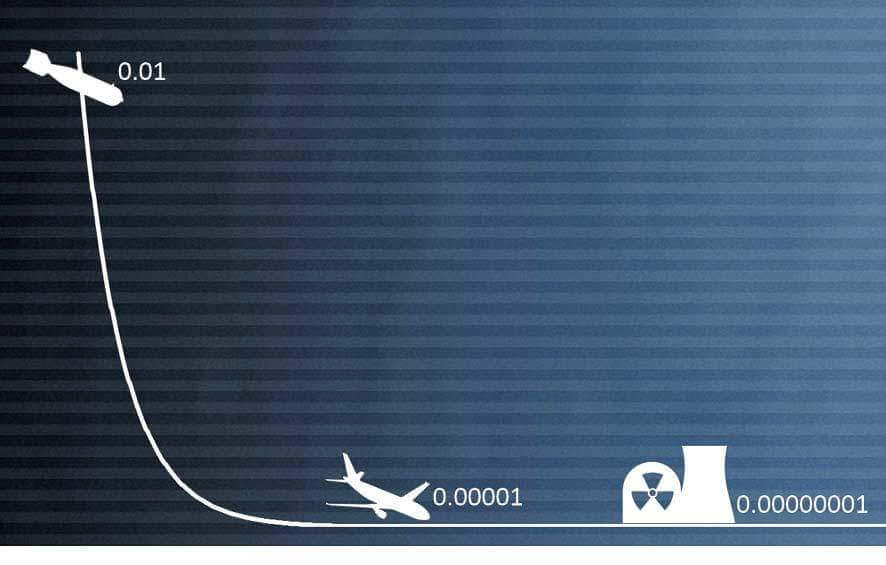

The Principle of Precaution. The obligation of states to take all feasible precautions to avoid, and in any event to minimize, incidental loss of civilian life, injury to civilians and damage to civilian objects inherently requires respect for the aforementioned principles of distinction and proportionality. In addition, the principle of precaution has to be respected during the initial development of a weapon itself. Every type of weapon has to demonstrate that it reliably stays within an acceptable failure rate, as no current technology is entirely free of errors. For example, the United States Congress set the acceptable failure rate for US cluster munitions at less than one percent. Recent general aviation accident rates in the United States are only a fraction of that figure, yet not even nuclear power plants can guarantee 100 percent reliability. It is doubtful that any future technology, autonomous weapons included, will ever achieve an error rate of zero. This again raises the questions ‘how much probability of error would be acceptable?’ and ‘how good is good enough?’ Weapon development and experimentation must therefore provide sufficient evidence to predict an autonomous weapon’s behaviour and effects on the battlefield with reasonable confidence.
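The practical weight of a nominally small failure rate can be illustrated with a simple calculation. Assume, purely for illustration and as a simplification that real weapon reviews would not necessarily make, that each employment of a weapon fails independently with the same probability $p$. Over $n$ employments, the probability of at least one failure and the expected number of failures are then:

$$
P(\text{at least one failure}) = 1 - (1 - p)^{n}, \qquad E[\text{failures}] = n\,p
$$

With the one percent threshold mentioned for cluster munitions ($p = 0.01$) and a hypothetical $n = 100$ employments, $1 - 0.99^{100} \approx 0.63$ and the expected number of failures is 1. A failure rate that sounds negligible for a single use thus makes at least one failure more likely than not over a modest number of uses, which is why the acceptable probability of error has to be defined explicitly rather than assumed.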

Responsibilities

The higher the degree of automation, and the lower the level of human interaction, the more pressing the question of who is actually responsible for the actions of an autonomous weapon. This question is most relevant when lethal capabilities cause civilian harm, whether incidentally or intentionally. Who will be held liable for a criminal act if IHL has been violated? Is it the military commander, the system operator, or even the programmer of the software?

Military Commander. Military commanders are responsible for preventing breaches of IHL and, where necessary, for taking disciplinary or judicial action if they are aware that subordinates or other persons under their control are going to commit, or have committed, such a breach. Military commanders are, of course, also responsible for any unlawful orders they give to their subordinates. This responsibility does not change when a commander authorizes the use of an autonomous weapon. If a commander was aware in advance that an autonomous weapon might act unlawfully and still wilfully deployed it, he or she would likely be held liable. In contrast, if weapon experimentation and testing had provided sufficient evidence that the autonomous weapon can be trusted to respect IHL, the commander would likely not be held accountable.

System Operator. The individual responsibility of the system operator may vary depending on the level of human interaction required, if any. Some autonomous systems already in service, such as Phalanx or Sea Horse, can operate in a mode in which the human operator has only a limited timeframe to stop the system from automatically releasing its weapons once a potential threat has been detected. It is doubtful whether liability can be attributed to the operator if the time between alert and weapon release is insufficient to verify manually whether the detected threat is real and whether engaging the computed target would be lawful under IHL.

Programmer. Software plays a key role in many of today’s automated systems. Hence, responsibility for an autonomous weapon’s behaviour and actions might be attributed predominantly to the programmer. However, modern software applications show clearly that the more complex the program, the greater the potential for software ‘bugs’. Large software projects are typically developed and modified by large teams of programmers, and each individual has only a limited understanding of the software in its entirety. Furthermore, it is doubtful whether an individual programmer could predict in detail every potential interaction between his or her portion of the source code and the rest of the software. Holding an individual person liable for software weaknesses is therefore probably not feasible unless there is evidence of intentionally erroneous programming.

Conclusions

International law does not explicitly address manually operated, automated or even autonomous weapons. Consequently, it draws no legal distinction between them. Regardless of the presence or absence of direct human control, any weapon and its use in armed conflict must comply with the principles and rules of IHL. Therefore, autonomous weapons cannot simply be labelled unlawful or illegal. In fact, they may be perfectly legal if they are capable of adhering to the principles and rules of IHL.

The core principles of International Humanitarian Law are distinction, proportionality and precaution. None of them can be considered in isolation, as they are interwoven and depend on one another to protect civilians and civilian objects during the conduct of hostilities. The technical requirements for an autonomous weapon system to adhere to these principles are extremely high, especially if it is intended to operate in a complex environment. However, given the current pace of advances in computer and sensor technology, it appears likely that these requirements could be met in the not-too-distant future.

Nevertheless, not even the most sophisticated computer system can be expected to be entirely flawless (cf. Figure 1). Consequently, the potential for erroneous system behaviour has to be an integral part of the review process and, most importantly, the acceptable probability of error needs to be defined.